In the industrial sector, onsemi enables OEMs to develop next‑generation systems that meet the performance, efficiency, and reliability demands of Industry 4.0. Our technologies drive advancements across Energy Infrastructure, Industrial Automation, and Smart Building and City applications. With wide‑bandgap SiC and GaN devices, high‑performance MOSFETs, IGBTs, and advanced gate drivers, we deliver high‑efficiency power conversion solutions for demanding industrial environments.

In industrial automation, onsemi provides precise motor control, industrial‑grade image sensors, depth sensing, and robust connectivity. Together these support high‑accuracy machine vision, robotics, automated material handling, and predictive maintenance, enabling fully connected, data‑driven manufacturing. Across energy infrastructure, our SiC power modules and high‑efficiency conversion platforms power solar inverters, energy storage, smart‑grid stages, and high‑power EV charging, increasing system efficiency and long‑term reliability.

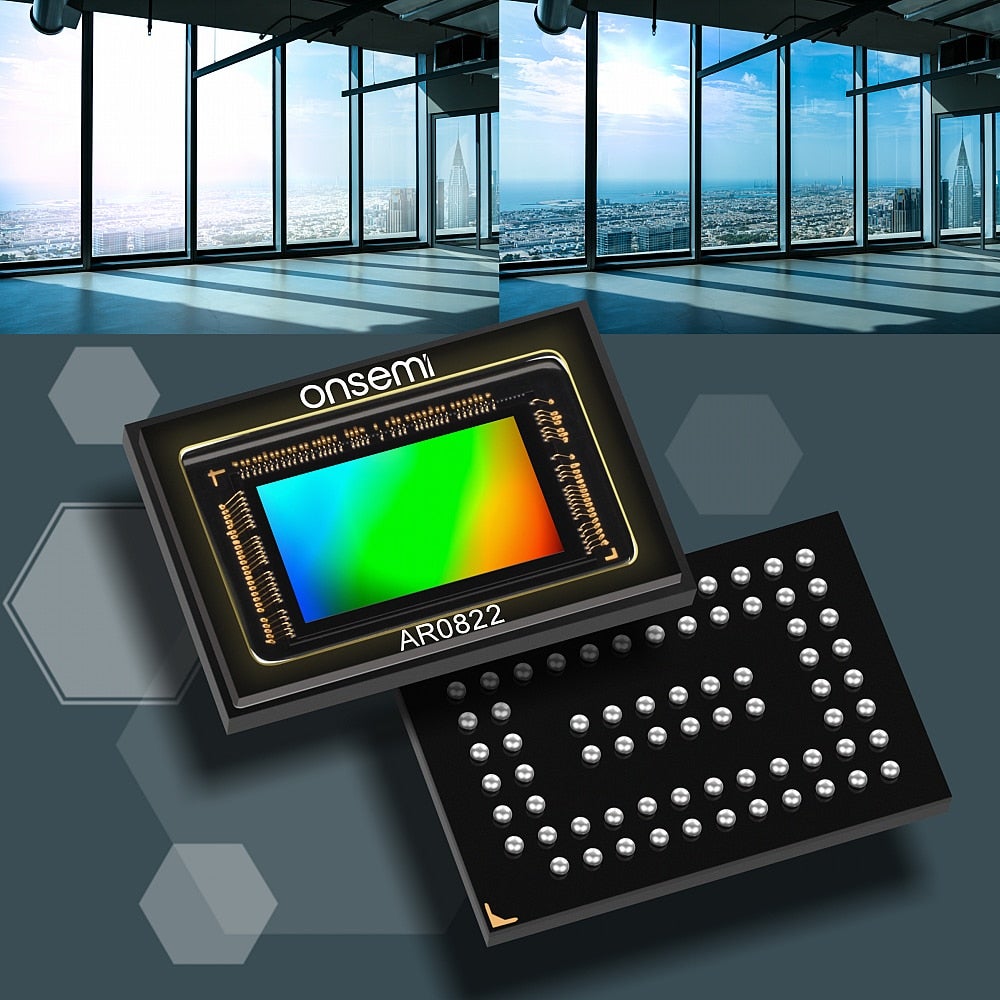

Smart building ecosystems benefit from onsemi’s motor drivers and power devices for heat pumps and HVAC optimization, industrial‑grade imaging for security and surveillance cameras, and compact, high‑efficiency power solutions for small EVs and battery‑powered tools. Secure connectivity integrates these systems into building automation frameworks that reduce energy consumption and enhance safety. In smart cities, onsemi imaging and low‑power connectivity support public safety monitoring, traffic analytics, and distributed environmental sensing, delivering scalable, reliable urban‑infrastructure solutions.

With decades of expertise, onsemi helps OEMs reduce energy consumption, enhance system reliability, and accelerate the adoption of sustainable, connected technologies that are shaping a cleaner, smarter, and more resilient future.