Drones have become pervasive in entertainment (TV and film production), in hobbyist photography, and simply as fun toys. Because they can reach hard-to-access areas, they are increasingly used in inspection, logistics and delivery, security and surveillance, and other industrial use cases. But did you know that the most critical component enabling a drone's operation is its vision system? Before diving deeper into this topic, let's explore what drones are, their diverse applications, and why they have surged in popularity. Finally, we'll discuss how onsemi is transforming the vision systems that power these incredible flying machines.

Types & Applications

Drones are unmanned aerial vehicles (UAVs), also called unmanned aerial systems (UAS) and, less commonly, remotely piloted aircraft (RPA). They can operate without an onboard pilot and navigate autonomously using a variety of systems.

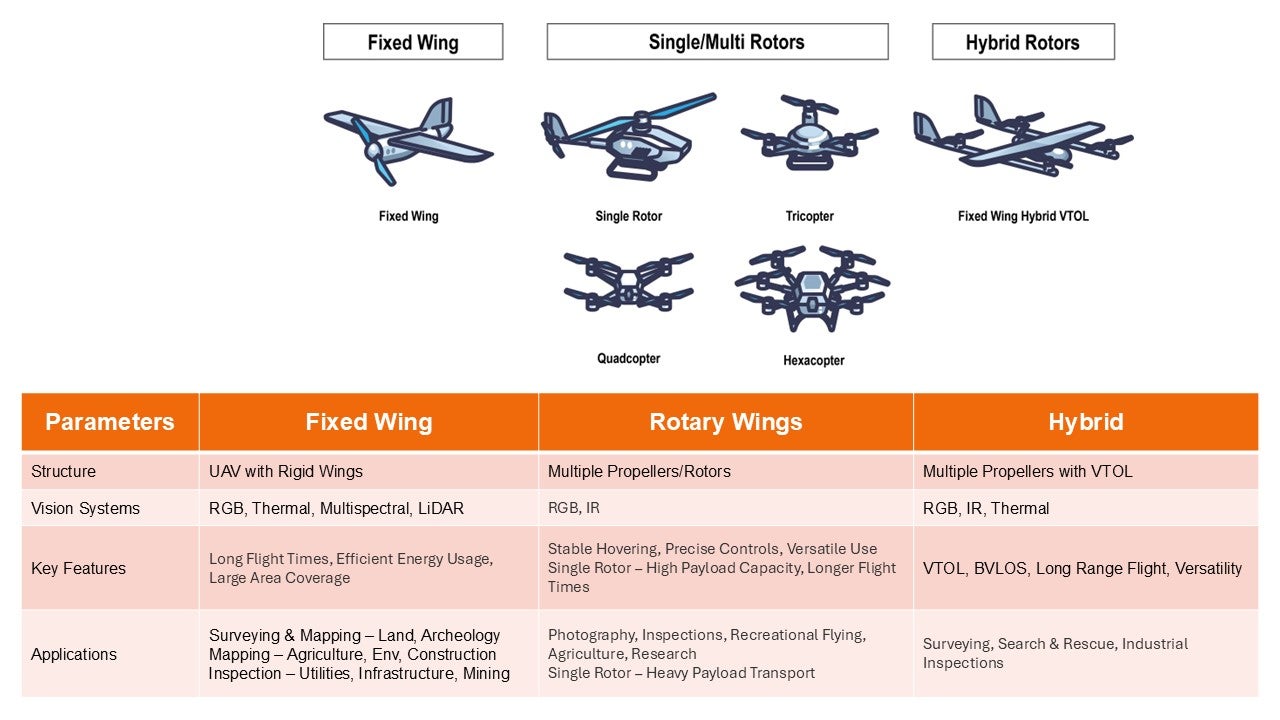

There are three main types of drones: fixed-wing, single-/multi-rotor, and hybrid. Each design is aligned with the missions it is built to serve.

Fixed-wing drones typically carry heavier payloads and offer longer flight times. They are deployed in intelligence, surveillance and reconnaissance (ISR) missions, combat operations and loitering munitions, and mapping and research activities, to name a few.

Single- and multi-rotor drones are the most widely used type, serving industrial applications that range from warehouse operations to inspections and even package delivery. Because they are deployed across such a wide variety of use cases, they demand highly optimized electro-mechanical solutions.

Hybrid drones combine the strengths of both types above and offer vertical take-off and landing (VTOL), which makes them versatile, particularly in space-constrained areas. Most delivery drones leverage these capabilities, since they must take off and land in tight spaces.

Motion & Navigation Systems in Drones

Drones carry a multitude of sensors for motion and navigation, including accelerometers, gyroscopes and magnetometers (collectively referred to as an inertial measurement unit, or IMU), barometers, and more. They rely on a variety of algorithms and techniques such as optical flow (assisted by depth sensors), simultaneous localization and mapping (SLAM), and visual odometry. While these sensors perform their functions well, they can struggle to achieve the required accuracy and precision at affordable cost and in compact sizes. The problem grows with longer flights, which either require larger, more expensive batteries or force flight times to be limited by how long a single battery charge lasts.

Vision Systems in Drones

Image sensors supplement these sensors with significant operational enhancements, resulting in a high-accuracy, high-precision machine. They are deployed in two subsystems: gimbals (often also referred to as payloads) and vision navigation systems (VNS).

Gimbals* – provide first-person view (FPV); they generally contain different types of image sensors spanning a wide range of the electromagnetic spectrum: ultraviolet in exceptional cases, standard CMOS image sensors covering 300 to 1000 nm, short-wave infrared (SWIR) sensors extending to 2000 nm, and medium-wave (MWIR) and long-wave infrared (LWIR) sensors beyond 2000 nm.

Vision Navigation Systems (VNS) – provide navigation guidance and object and collision avoidance; they are generally built from inexpensive, low-resolution image sensors whose output, combined with IMU and other sensor data, feeds computer vision techniques that create a comprehensive solution for autonomous navigation.

Vision Systems’ Importance

As the applications described earlier show, drones operate both indoors and outdoors. These conditions can be highly challenging, with widely varying lighting and limited visibility in dust, fog, smoke, and pitch-black environments. Drone vision systems rely heavily on artificial intelligence (AI) and machine learning (ML) algorithms applied to image data, assisted by data from the sensors and techniques mentioned previously, all within a highly optimized vehicle that must consume little power while delivering long range or extended flight times.

The data fed into these algorithms must be high-fidelity and highly detailed, yet in certain use cases contain only what is needed, enabling efficient processing. AI/ML training times need to be short, and inference needs to be fast, accurate, and precise. To meet these requirements, images must be of high quality regardless of the environment in which the drone operates.

Sensors that merely capture the scene and pass it on for processing fall significantly short of enabling high-quality operation, which in most cases defeats the very purpose of deploying these machines. Several sensor capabilities deliver tremendous benefits in making UAVs highly optimized: scaling down the image while retaining full detail in regions of interest; wide dynamic range to handle bright and dark areas in the same frame; minimizing or removing parasitic effects in images; seeing through dust-, fog-, and smoke-filled fields of view; and supplementing images with high-resolution depth data.

These capabilities reduce the resources required to reconstruct and analyze these images (processing cores, GPUs, on-chip or off-chip memory, bus architectures, and power management) and speed up decision-making. This also reduces the bill-of-materials (BOM) cost of the overall system, especially since today's UAVs can easily host more than 10 image sensors. Alternatively, the same set of resources can run more analysis and more complex decision-making algorithms, differentiating a UAV in this crowded field.
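To make the resource argument more concrete, here is a rough back-of-the-envelope sketch in Python. It compares the uncompressed pixel data rate of streaming full-resolution frames against a downscaled overview plus one full-resolution region-of-interest (ROI) crop, then scales both by the number of sensors. Every figure (a 3840 x 2160 sensor, 30 fps, 10 bits per pixel, 2x downscaling per axis, a 640 x 480 ROI, 10 sensors) and every function name is a hypothetical assumption for illustration only, not a specification or feature description of any onsemi product.

```python
"""Back-of-the-envelope pixel bandwidth estimate (all numbers hypothetical)."""


def raw_rate_mbps(width: int, height: int, fps: float, bits_per_pixel: int) -> float:
    """Uncompressed pixel data rate in Mbit/s (ignores blanking and protocol overhead)."""
    return width * height * fps * bits_per_pixel / 1e6


def roi_strategy_rate_mbps(width: int, height: int, fps: float, bpp: int,
                           downscale: int, roi_w: int, roi_h: int) -> float:
    """Rate for a downscaled full-scene overview plus one full-resolution ROI crop."""
    overview = raw_rate_mbps(width // downscale, height // downscale, fps, bpp)
    roi = raw_rate_mbps(roi_w, roi_h, fps, bpp)
    return overview + roi


# Hypothetical example parameters; not taken from any datasheet.
WIDTH, HEIGHT = 3840, 2160
FPS = 30
BPP = 10            # assumed 10-bit raw output
DOWNSCALE = 2       # assumed 2x downscaling on each axis for the overview
ROI_W, ROI_H = 640, 480
NUM_SENSORS = 10    # the article notes UAVs can host more than 10 image sensors

if __name__ == "__main__":
    full = raw_rate_mbps(WIDTH, HEIGHT, FPS, BPP)
    reduced = roi_strategy_rate_mbps(WIDTH, HEIGHT, FPS, BPP, DOWNSCALE, ROI_W, ROI_H)
    print(f"Full frame per sensor:     {full:8.1f} Mbit/s")
    print(f"Overview + ROI per sensor: {reduced:8.1f} Mbit/s")
    print(f"Fraction of full rate:     {reduced / full:.1%}")
    print(f"{NUM_SENSORS} sensors, full frame:     {NUM_SENSORS * full / 1000:.2f} Gbit/s")
    print(f"{NUM_SENSORS} sensors, overview + ROI: {NUM_SENSORS * reduced / 1000:.2f} Gbit/s")
```

With these assumed numbers, the overview-plus-ROI stream carries about 714 Mbit/s per sensor versus about 2488 Mbit/s for full frames (roughly 29%), and the gap multiplies across every sensor on the airframe. Real savings depend on the actual sensor modes, ROI sizes, and processing pipeline.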

onsemi is the technology leader in sensing, contributing significant innovations to vision system solutions and offering a comprehensive set of image sensors to address the needs of gimbals and VNS. The Hyperlux LP, Hyperlux LH, Hyperlux SG, Hyperlux ID and SWIR product families incorporate technologies and features that comprehensively address the needs of drone vision systems. Drone manufacturers can now obtain their vision sensors from a single, NDAA-compliant American source.


* Technically, a gimbal is the mechanism that carries and stabilizes a specific payload; however, the combined assembly is often called a gimbal.