Image sensors are becoming more prevalent, especially in security, industrial and automotive applications. Many vehicles now feature five or more image sensor-based cameras. However, image sensor technology differs from standard semiconductor technology, often leading to misconceptions.

Moore’s Law and Image Sensors

Some people assume that the famous ‘Moore’s Law’ should apply to image sensors. Moore’s Law stems from an observation by Gordon Moore (a co-founder of Fairchild Semiconductor, now part of onsemi) that the number of transistors in an integrated circuit (IC) doubles roughly every two years. Shrinking the transistor was the primary enabler of putting twice as many on a device. This trend has continued for decades, although the pace of scaling has slowed recently. The other effect of increasing transistor density was a decrease in the cost per transistor, so many electronic systems gained functionality over time without increasing in price.

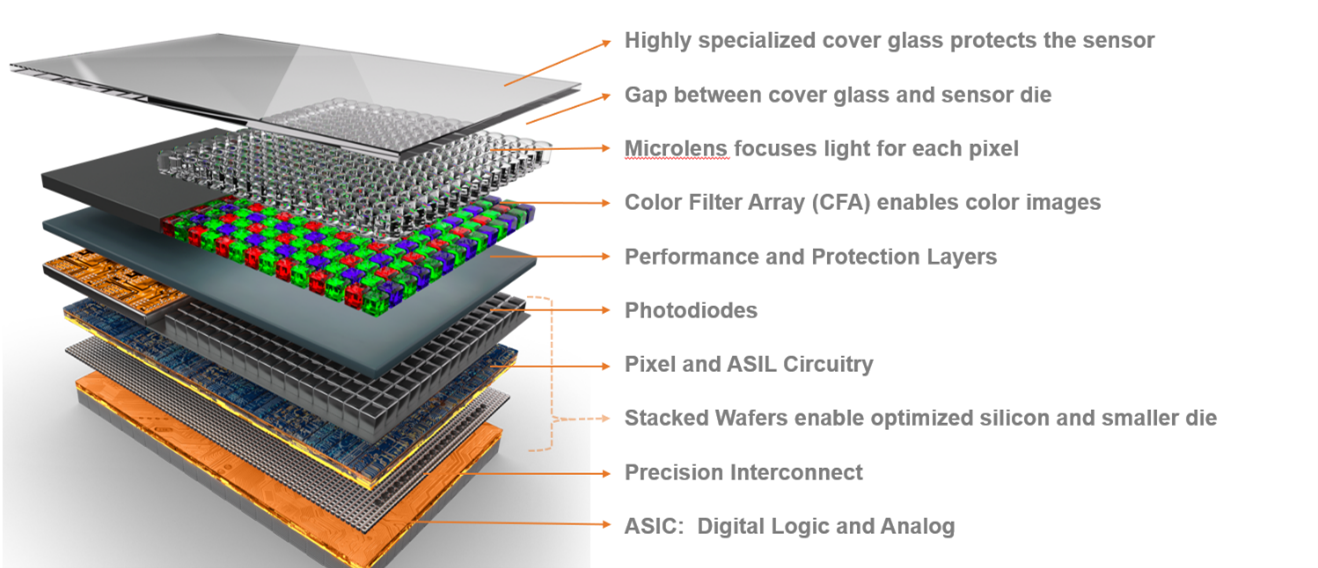

Image sensors are different because some of the most important parts of the device do not scale as transistors get smaller. Specifically, the optical elements of image sensors, such as the photodiodes where incoming light is converted to an electrical signal, and some of the analog elements that convert that electrical signal into a digital picture simply do not scale as easily as digital logic. In a sensor, image capture is primarily analog, while digital circuitry converts the digitized data from each pixel into an image that can be stored, displayed or used for AI machine vision.

If pixel count were to double every two years while keeping the lens size, and therefore the sensor area, the same, the pixels would be smaller and would each receive fewer photons. (Think of a bucket on a rainy day: a smaller bucket will collect fewer raindrops.) To produce an equally good image in low light, the sensor must therefore offer higher sensitivity per unit area and lower noise. Increasing the number of pixels without an application need does not make sense, especially as it requires more bandwidth and storage, which increases costs elsewhere in the system.
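The bucket analogy can be put into rough numbers. The sketch below is only an illustration, assuming square pixels, uniform illumination, and a shot-noise-limited signal (where the signal-to-noise ratio is the square root of the photon count). The function names and the flux value are hypothetical and do not describe any particular sensor.

```python
import math

def photons_per_pixel(photon_flux_per_um2, pixel_pitch_um):
    """Photons collected by one square pixel during a single exposure."""
    return photon_flux_per_um2 * pixel_pitch_um ** 2

def shot_noise_snr(photons):
    """Shot-noise-limited SNR: signal N divided by noise sqrt(N)."""
    return math.sqrt(photons)

flux = 100.0  # hypothetical photons per square micron per exposure

# Doubling the pixel count on the same sensor area halves the pixel area,
# so the pixel pitch shrinks by a factor of sqrt(2).
large_pitch_um = 3.0
small_pitch_um = large_pitch_um / math.sqrt(2)

large_photons = photons_per_pixel(flux, large_pitch_um)
small_photons = photons_per_pixel(flux, small_pitch_um)

print(f"large pixel: {large_photons:.0f} photons, SNR {shot_noise_snr(large_photons):.1f}")
print(f"small pixel: {small_photons:.0f} photons, SNR {shot_noise_snr(small_photons):.1f}")
```

Under these assumptions, halving the pixel area halves the photons per pixel and lowers the per-pixel SNR by a factor of about 1.41, which is the gap that better sensitivity and lower noise would have to close.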

Pixel Size

Pixel size alone is not sufficient to understand pixel performance. We cannot automatically assume that a physically larger pixel necessarily correlates to better image quality. While pixel performance across different light conditions is important, and larger pixels have more area to gather the available light, this does not always lead to enhanced image quality. Multiple factors are equally critical, including the resolution and pixel noise metrics.

A sensor incorporating smaller pixels can outperform a sensor with larger pixels covering the same optical area if the benefits of higher resolution have more impact on the application than the lower number of photons per pixel that results from the smaller pixel area. What matters is that the amount of light is sufficient to form a quality image, so both pixel sensitivity (how efficiently the pixel converts photons to an electrical signal) and the lighting conditions are important.

Pixel size is a factor in selecting a sensor for an application. However, one can overstate its importance; it is just one parameter among several that merit equal consideration. When choosing a sensor, designers must consider all requirements of the target application and then determine the ideal balance of speed, sensitivity, and image quality to achieve a suitable design solution.

Split-Pixel Design

Maximizing dynamic range is valuable in many applications to render shadows and highlights correctly in the final image, and this can be challenging for image sensors. Some companies use a technique known as ‘split pixel’ to sidestep the challenge of giving the photodiode more capacity to collect electrons before it “gets full” (saturates).

With the split-pixel approach, the sensor area dedicated to a single pixel is divided into two parts: a larger photodiode covers most of the area and a smaller photodiode uses the remainder. The larger photodiode collects more photons and is susceptible to saturation in bright conditions. In contrast, the smaller photodiode can be exposed for a longer duration without saturating, because it has less area available to gather photons. You can visualize this by comparing a bucket to a bottle when your goal is collecting raindrops. A bucket typically has a top that is wider than its base, so it collects rain very efficiently but fills up more quickly than a bottle, which has a small opening and a wider body. By using the larger photodiode in low-light conditions and the smaller photodiode in bright conditions, it is possible to create an extended dynamic range.
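A toy model can make the bucket-and-bottle idea concrete. The sketch below is a simplification, not a description of any vendor's readout: it assumes both photodiodes share one exposure and the same full-well capacity, and the area and capacity values are hypothetical. It reports the large photodiode's reading until that photodiode saturates, then switches to the small one.

```python
def photodiode_signal(flux, area, full_well):
    """Electrons collected by one photodiode, clipped at its full-well capacity."""
    return min(flux * area, full_well)

def split_pixel_estimate(flux, large_area=8.0, small_area=1.0, full_well=10_000.0):
    """Estimate the light level (electrons per unit area) from a split pixel.

    All default values are hypothetical. The large photodiode is used while it
    is below saturation; otherwise the small photodiode is used instead.
    """
    large = photodiode_signal(flux, large_area, full_well)
    if large < full_well:
        return large / large_area   # low light: the large photodiode is reliable
    small = photodiode_signal(flux, small_area, full_well)
    return small / small_area       # bright light: fall back to the small photodiode

for flux in (100.0, 5_000.0, 20_000.0):
    print(flux, "->", split_pixel_estimate(flux))
```

With these numbers, the large photodiode alone clips at a light level of 1,250 electrons per unit area, while the pair stays usable up to 10,000. Above that level the small photodiode saturates too and the estimate is clipped.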

At onsemi, we address the saturation problem differently, by adding an area to the pixel where the signal, or charge, can overflow. Imagine we use our bucket for catching raindrops, but we have a larger basin to hold the water if the bucket overflows. The “bucket” signal is easily read with very high accuracy, so we achieve excellent low-light performance, and the overflow basin contains everything that overflowed, thereby extending the dynamic range. The entire pixel area is therefore used in low-light conditions, without saturation in brighter conditions. Saturation would degrade aspects of image quality such as color and sharpness. As a result, onsemi’s Super-Exposure technology delivers better image quality across high dynamic range scenes for human and machine vision applications.
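The bucket-and-basin picture can be sketched as a simple charge model. This is only an illustration of the analogy, not onsemi's actual pixel design or readout: the function names and both capacity values are hypothetical, and real effects such as noise and conversion gain are ignored.

```python
def overflow_pixel(electrons, pd_full_well=10_000.0, overflow_capacity=200_000.0):
    """Split generated charge between the photodiode and an overflow region.

    Both capacities are hypothetical. Charge beyond the photodiode's full well
    spills into the overflow region, which itself clips when it is full.
    """
    photodiode = min(electrons, pd_full_well)
    spilled = max(electrons - pd_full_well, 0.0)
    overflow = min(spilled, overflow_capacity)
    return photodiode, overflow

def reconstruct(photodiode, overflow):
    """Total signal is the photodiode reading plus whatever overflowed."""
    return photodiode + overflow

for generated in (5_000.0, 50_000.0, 1_000_000.0):
    pd, ov = overflow_pixel(generated)
    print(generated, "->", pd, ov, reconstruct(pd, ov))
```

In this model, low-light signals sit entirely in the photodiode, brighter signals are recovered by adding the overflow, and the pixel only clips once both regions are full.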

The next time you need to select an image sensor for your design, remember, ‘more and bigger is not always better’—at least as far as pixels are concerned.